What is Big Data?

Big Data originally emerged as a term to describe large datasets that could not be captured, stored, managed or analysed using traditional databases. However, the definition has broadened significantly over the years. Big Data now refers not only to the data itself, but also to the set of technologies that perform all of the aforementioned functions, as well as varied collections that solve complex problems and make unlocking value from that data more economical.

“According to research, the use of big data has improved the performance of businesses by an average of 26%”

To be more specific, Big Data has the following characteristics:

- It works with petabytes or exabytes of data

- It involves millions or billions of people

- It accumulates billions or trillions of records

- It uses flat schemas with few complex interrelationships

- It most often involves time-stamped events

- It works with incomplete data sets

- It includes connections between data sets that are probabilistically incidental

Big data can be classified by its structure as follows:

- Structured

- Semi-structured

- Unstructured

Structured: Structured data mostly comes from traditional sources of information.

Semi-structured: Semi-structured data covers many of the sources of big data.

Unstructured: Unstructured data includes information such as video and audio data.

4 V’s of Big Data:

- Volume

- Variety

- Velocity

- Veracity

Volume: Volume refers to the quantity of information that is produced. The size of the data indicates its value and potential, and determines whether it can be considered big data at all.

Variety: Variety refers to the category to which the data belongs, which is essential for the data analyst to know.

Velocity: Velocity refers to the speed at which the information is produced.

Veracity: The quality of the captured information may vary a lot, and the precision of the analysis depends on the veracity of the source information.

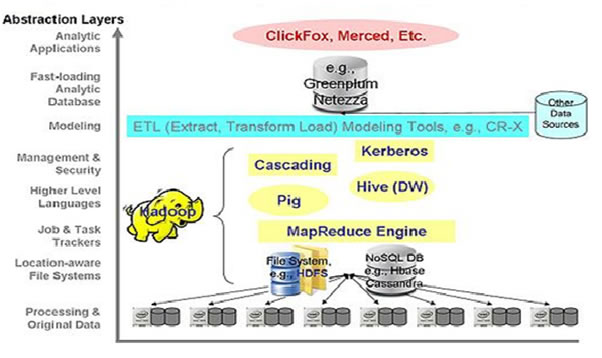

Components of Big Data:

- Hadoop packaging and support organizations such as Cloudera, whose distributions include MapReduce, essentially the compute layer of big data.

- A file system such as the Hadoop Distributed File System (HDFS), which manages the storage and retrieval of the data and metadata required for computation. Databases such as HBase can also be used.

- A higher-level language such as Pig (part of Hadoop), which can be used instead of Java to simplify the writing of computations.

- A data warehouse layer named Hive, built on top of Hadoop.
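To make the role of the compute layer more concrete, the MapReduce model can be sketched in plain Python. This is only an in-memory illustration of the map, shuffle and reduce phases, not Hadoop's actual API; the function names and the sample records are assumptions made for this example.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in record.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse all values for one key into a single result."""
    return key, sum(values)


def run_job(records):
    """Run the three phases in sequence over an in-memory list of records."""
    mapped = chain.from_iterable(map_phase(r) for r in records)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


# Hypothetical input records, e.g. lines from a transaction log.
records = [
    "card payment declined",
    "card payment approved",
    "wire transfer approved",
]
counts = run_job(records)
```

In a real cluster the map and reduce functions run in parallel on many machines and the input is read from a file system such as HDFS; the sketch only shows how the phases fit together.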

Why Big Data in Financial Services?

For financial institutions, mining big data provides a huge opportunity to stand out from the competition. The data landscape for financial institutions is changing fast. It is no longer enough to leverage institutional data; this has to be augmented with open data, such as social media, to enhance decision making. By using data science and machine learning to gather and analyse big data, financial institutions can reinvent their businesses. They are becoming aware of the potential of these technologies and are beginning to explore how data science and machine learning could enable them to streamline operations, improve product offerings, and enhance customer experiences.

Financial services companies generate and compile exabytes of data a year, including structured data such as customer demographics and transaction history, and unstructured data such as customer behavior on websites and social media. Most financial organizations also remain subject to rules on credit risk and liquidity ratio levels, including regulations such as AML, Basel III and FATCA, which increase the amount of customer data available for analysis.

Financial institutions also now have to sift through much more data to identify fraud. Analyzing traditional customer data is not enough, as most customer interactions now occur through the Web, mobile apps and social media. To gain a competitive edge, financial services companies need to leverage big data to better comply with regulations, detect and prevent fraud, determine customer behavior, increase sales, develop data-driven products and much more.

Big Data Use Cases in Financial Services

Here are five of the most common use cases where banks and financial services firms are finding value in big data analytics.

1. Compliance and Regulatory Requirements

Financial services firms operate under a heavy regulatory framework, which requires significant levels of monitoring and reporting. The Dodd–Frank Act, enacted after the 2008 financial crisis, requires deal monitoring and documentation of the details of every trade. This data is used for trade surveillance that recognizes abnormal trading patterns.

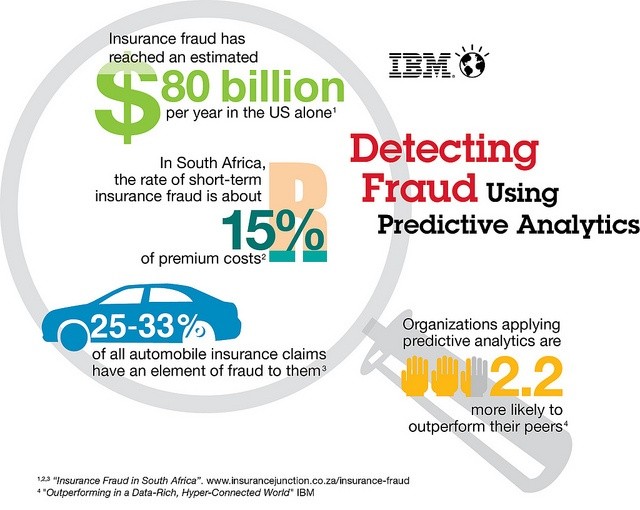

2. Fraud Detection

Banks and financial services firms use analytics to differentiate fraudulent interactions from legitimate business transactions. By applying analytics and machine learning, they are able to define normal activity based on a customer’s history and distinguish it from unusual behavior indicating fraud. The analysis systems suggest immediate actions, such as blocking irregular transactions, which stops fraud before it occurs and improves profitability.
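As a rough illustration of defining normal activity from a customer's history, the sketch below flags a transaction whose amount lies far from that customer's historical mean. It is a deliberately simple z-score check, not a description of any bank's actual system; the function name, the threshold of three standard deviations and the sample amounts are all assumptions.

```python
from statistics import mean, stdev


def is_unusual(history, amount, threshold=3.0):
    """Return True if `amount` lies more than `threshold` sample standard
    deviations away from the mean of the customer's past amounts."""
    if len(history) < 2:
        return False  # not enough history to define "normal"
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount was identical
    return abs(amount - mu) / sigma > threshold


# Hypothetical past card payments for one customer.
history = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5]

typical = is_unusual(history, 48.0)    # close to past behaviour
suspect = is_unusual(history, 900.0)   # far outside past behaviour
```

A production system would use many more signals than the amount (merchant, location, time of day, device) and typically a trained model, but the principle of comparing new activity against a per-customer baseline is the same.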

3. Customer Segmentation

Banks have been under pressure to change from product-centric to customer-centric businesses. One way to achieve that transformation is to better understand their customers through segmentation. Big data enables them to group customers into distinct segments, which are defined by data sets that may include customer demographics, daily transactions, interactions with online and telephone customer service systems, and external data, such as the value of their homes. Promotions and marketing campaigns are then targeted to customers according to their segments.
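One common way to form such segments is clustering. The sketch below is a minimal k-means implementation in plain Python, assuming each customer is described by two hypothetical features (monthly transaction count and average balance in thousands); the feature choice, the data and the deterministic initialisation are assumptions for illustration only.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means: return (centroids, labels) for a list of numeric tuples."""
    centroids = [points[i] for i in range(k)]  # deterministic start: first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, labels


# Hypothetical customers: (monthly transactions, average balance in thousands).
customers = [(5, 1.2), (60, 48.0), (7, 0.9), (55, 52.5), (6, 1.5), (58, 50.0)]
centroids, labels = kmeans(customers, k=2)
```

Here the low-activity and high-activity customers fall into separate segments. With real data the features would normally be scaled first, so that no single attribute dominates the distance calculation.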

“Paves the way for a fast and more transparent reconciliation”

4. Personalized Marketing

One step beyond segment-based marketing is personalized marketing, which targets customers based on an understanding of their individual buying habits. While it is supported by big data analysis of merchant records, financial services firms can also incorporate unstructured data from their customers’ social media profiles to create a fuller picture of the customers’ needs through customer sentiment analysis. Once those needs are understood, big data analysis can create a credit risk assessment to decide whether or not to go ahead with a transaction.

5. Risk Management

While every business needs to engage in risk management, the need may be greatest in the financial industry. Regulatory schemes such as Basel III require firms to manage their market liquidity risk through stress testing. Financial firms also manage their customer risk through analysis of complete customer portfolios. The risks of algorithmic trading are managed by backtesting strategies against historical data. Big data analysis can also support real-time alerting if a risk threshold is surpassed.
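The idea of alerting when a risk threshold is surpassed can be sketched as a running check over a stream of trades. The example below is an assumption-laden simplification: it tracks a single net exposure figure against a fixed limit, and the function name, trade identifiers and amounts are hypothetical.

```python
def monitor_exposure(trades, limit):
    """Yield (trade_id, exposure) for every trade that leaves the absolute
    net exposure above `limit`."""
    exposure = 0.0
    for trade_id, amount in trades:
        exposure += amount  # positive = buy, negative = sell
        if abs(exposure) > limit:
            yield trade_id, exposure


# Hypothetical trade stream: (trade id, signed notional amount).
trades = [
    ("T1", 400_000.0),
    ("T2", 350_000.0),
    ("T3", 300_000.0),
    ("T4", -500_000.0),
]
alerts = list(monitor_exposure(trades, limit=1_000_000.0))
```

Because the function is a generator, it can consume trades as they arrive and raise each alert immediately, rather than waiting for an end-of-day batch.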

“A reduction in paperwork and improved process automation”

Big Data in JPMorgan Chase

JPMorgan Chase generates a vast amount of credit card information and other transactional data about its US-based customers. Along with publicly available economic statistics from the US government, JPMorgan Chase uses new analytic capabilities to develop proprietary insights into consumer trends, and in turn offers those reports to the bank’s clients. The Big Data analytic technology has allowed the bank to break down the consumer market into smaller segments, even into single individuals, and for reports to be generated in seconds.

JPMorgan Chase analyses emails, phone calls and transaction data to detect possible fraud that would otherwise be difficult to detect. It uses analytics software developed by Palantir to keep track of employee communications and identify any indications of internal fraud.

Big Data in Credit Suisse

Credit Suisse now has visibility into the different relationships a client has with Credit Suisse, allowing international client assessments to be made 80 percent faster compared to last year. Potential clients with a government connection, so-called ‘politically exposed persons’, are assessed approximately 60 percent faster at approximately 40 percent lower cost.

Credit Suisse has been deploying software “robots” to carry out certain repetitive compliance tasks. One of them, dubbed “James the Robot”, is used for suitability and appropriateness checks, to ensure clients are invested in the appropriate products. James conducts these checks 200 times faster than when they were done manually. The big increase in the number of checks performed represents a significant reduction in risk for the bank.

Big Data in Citi

Citi, for its part, is also experimenting with new ways of offering commercial customers transaction data aggregated from its global customer base, which can be used to identify new trade patterns. The data could, for example, reveal indicators of what might be the next big cities in the emerging markets. According to an executive who manages internal operations and technology at Citi, the bank shared such information with a large Spanish clothing company, which was able to determine where to open a new manufacturing facility and several new outlets.

“Strategically designing and optimising their treasury business”

Big Data in Bank of America

Bank of America (BoA) used the analytic capabilities of Big Data to understand why many of its commercial customers were defecting to smaller banks. It used to offer an end-to-end cash management portal that proved to be too rigid for customers wanting the freedom to access ancillary cash management services from other financial services firms. It discovered that smaller banks could deliver more modular solutions. BoA used data obtained from customer behavior on its own website, as well as from call center logs and transcripts of one-on-one customer interviews, to ascertain why it was losing customers. In the end, it dropped the all-in-one offering and launched a more flexible online product, CashPro Online, in 2009, followed by a mobile version, CashPro Mobile, in 2010.

Big Data in Deutsche Bank

Deutsche Bank understands the transformational potential of Big Data and has been well ahead of the curve, making significant investments across all areas of the bank. Deutsche Bank currently has multiple production Hadoop platforms built on open source software, which reduces the cost of data processing.

Big Blind Spots

Even the most diligently unbiased archives are constrained by the availability of materials and the fluency of their search. When it comes to Big Data, we know only what we have recorded, and we record what we can knowingly capture. This bias towards measurable information affects areas of science and could contribute to misleading results.

Since we are inevitably hampered by the unknown, it is critical to qualify the importance of the known, to be aware that we might be dealing with informational biases, and to reconfigure our research and results to account for both present and absent information.

Conclusion

Overall, 62% of banks believe that managing and analysing big data is critical to their success. However, only 29% report that they are currently extracting enough commercial value from data. This means there is significant upside, and it may be some time before Big Data becomes part of the standard business dynamic. The technical key to successful usage of Big Data and digitization of business processes is the ability of the organisation to collect and process all the required data, and to inject this data into its business processes in real time or, more accurately, in right time.

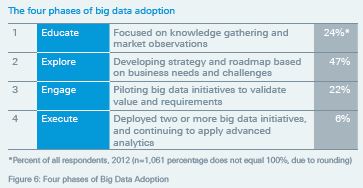

Therefore, rather than jump into Big Data blindly, a more pragmatic approach would be to test the water first, prioritize investments and use this process to determine how prepared the organisation is for a Big Data transformation, as well as how fast and how deep it can go. Ask the right questions, focus on business problems and, above all, consider multiple insights to avoid any Big Data traps or pitfalls.